There are few things I enjoy more in a scientific document than a particularly punchy patch of purple prose. In 1939, Truman Kelley had an important insight to share, and I love it that he got his point across by going all in and over the top:

The first earmark of an unimportant factor is its Venus-like birth from the meditations of a Spranger, the libido of a Freud, the hunch of an enthusiastic employment officer, or the dial of a differential analyzer. The second earmark is also Venus-like—the factor stands in virgin purity, untrammeled by clothes of doubt, untouched by considerations of probable error, unqualified by tentative endorsement. The third earmark is almost a consequent of the other two—the factor has never been put to work, it has never served the needs of man in school, in business, in social adaptation. Truly none of these earmarks detract from the beauty of the picture and possibly this Venus will scrub floors as well as win acclaim for harmony of proportion and then, indeed, we shall be blessed. But initially let us call a factor with these earmarks just a Venus-factor and not load it down or trust it with heavy work.

What is the heavy work that is to be done? We have eight million unemployed, another many millions feeling thwarted and believing that could they but find a channel of expression more fitted to their talents, their life would be richer and society of which they are a part would be the better. The heavy work resting squarely upon the shoulders of the typologist, of the mental factorist, of the character analyst, and upon the comprehensiveness, accuracy, and analytical nature of the mental measures that he uses, is to render aid in the mental and social adjustments necessary to alleviate the thwartings mentioned.

This heavy work must not be encumbered with trivial mental factors. Let me suggest a Factor-of-no-importance that might be derived by the method of statistical analysis of test data. If tests of taste sensitivity to para-ethoxy-phenol-thio-carbamide were included in a series of tests to be factorized by matrix methods I have no doubt that a taste trait would evolve as an independent factor. Certainly it is real, psychologically real, perhaps genetically real, but still in comparison with those mental, sensory, and motor things that underlie the adequate adjustment of individuals in the society in which we live it certainly is a Factor-of-no-importance. I can, by a vigorous stretch of the imagination, conceive of a society in which such a factor would be important. I do not object to students spending their time upon such factors with the hope of discovering something of genetic or other importance, but I do insist that evidence of existence of a factor be not cited as evidence that it is important in the meeting of pressing guidance and social problems. (pp. 140–141)

Kelley, T. L. (1939). Mental factors of no importance. Journal of Educational Psychology, 30(2), 139–142. https://doi.org/10.1037/h0056336

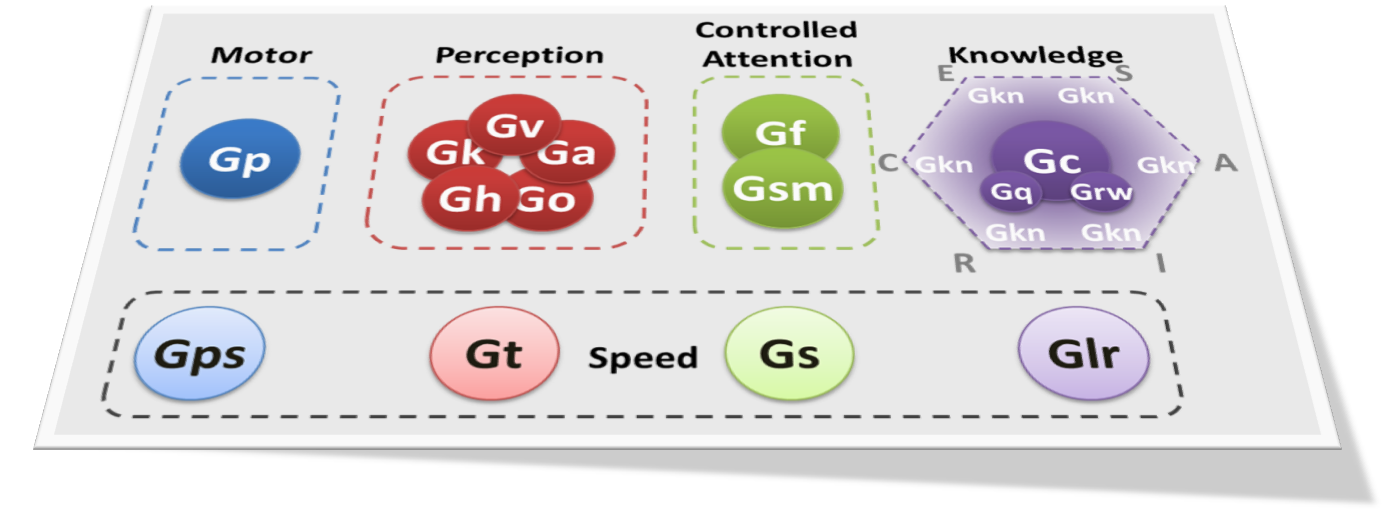

In his 1993 masterwork, *Human Cognitive Abilities: A Survey of Factor-Analytic Studies*, John Carroll occasionally noted that some of the factors he identified had no known utility at the time of writing. To prevent endless proliferation of useless ability constructs, Kevin McGrew and I (Schneider & McGrew, 2018) proposed that new ability constructs should meet the following criteria:

- The content domain of a new ability must be laid out clearly.

- The new ability must be measurable with performance tests using multiple test paradigms.

- Measures of the new ability must demonstrate convergent and discriminant validity when measured alongside other abilities.

- Measures of the new ability must demonstrate incremental validity over measures of other more established abilities when predicting important outcomes.

- The new ability construct should be linked plausibly to specific neurological functions.

- The new ability construct should be linked plausibly to functions that evolved to help humans survive and reproduce.

These criteria were inspired by similar proposals by Raymond Cattell, Howard Gardner, and John Mayer and his colleagues.
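The incremental validity criterion is the one that most directly answers Kelley's complaint, and it is easy to illustrate. Here is a minimal sketch, assuming the usual hierarchical-regression approach: fit the outcome on established measures, add the new measure, and see how much R² improves. The data are simulated and every name (`g`, `useful`, `venus`, `incremental_r2`) is made up for illustration; nothing here comes from a real dataset.

```python
import numpy as np

def r_squared(X, y):
    """R^2 from an ordinary least-squares fit that includes an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    return 1 - resid.var() / y.var()

def incremental_r2(established, new, outcome):
    """Gain in R^2 when the new measure is added to the established ones."""
    base = r_squared(established, outcome)
    full = r_squared(np.column_stack([established, new]), outcome)
    return full - base

# Simulated scores (hypothetical, for illustration only)
rng = np.random.default_rng(0)
n = 2000
g = rng.normal(size=n)        # an established ability
useful = rng.normal(size=n)   # a new ability that matters for the outcome
venus = rng.normal(size=n)    # a new ability that is real but irrelevant here
outcome = 0.6 * g + 0.3 * useful + rng.normal(size=n)

delta_useful = incremental_r2(g, useful, outcome)
delta_venus = incremental_r2(g, venus, outcome)
print(f"Delta R^2, useful factor: {delta_useful:.3f}")
print(f"Delta R^2, Venus-factor:  {delta_venus:.3f}")
```

In this toy setup, both new factors are equally "real" in the sense that they would each emerge as independent factors, but only one of them adds anything to prediction once the established ability is accounted for. That gap is what the criterion asks researchers to demonstrate.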

A systematic and thorough review of the evidence for each ability construct in CHC theory is sorely needed. Some of the broad CHC abilities have been vetted thoroughly enough that they clearly meet all these criteria, but others have not. Most narrow abilities have long languished in the preliminary stages of construct validation. So much work to do…