I do not relish criticizing published studies. However, if a paper uses flawed reasoning to arrive at counterproductive recommendations for our field, I believe that it is proper to respectfully point out why the paper’s conclusions should be ignored. This study warrants such a response:

The authors of this study ask whether children with learning disorders have the same structure of intelligence as children in the general population. This might seem like an important question, but it is not—if the difference in structure is embedded in the very definition of learning disorders.

An Analogously Flawed Study

Imagine that a highly respected medical journal published a study titled Tall People Are Significantly Greater in Height than People in the General Population. Puzzled and intrigued, you decide to investigate. You find that the authors solicited medical records from physicians who labelled their patients as tall. The primary finding is that such patients have, on average, greater height than people in the general population. The authors speculate that the instruments used to measure height may be less accurate for tall people and suggest alternative measures of height for them.

This imaginary study is clearly ridiculous. No researcher would publish such a “finding” because it is not a finding. People who are tall have greater height than average by definition. There is no reason to suppose that the instruments used were inaccurate.

Things That Are True By Definition Are Not Empirical Findings.

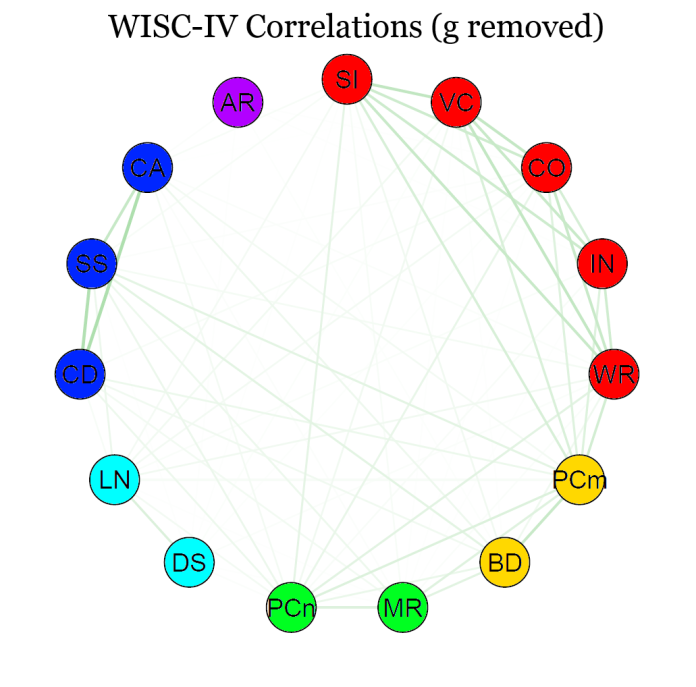

It is not so easy to recognize that Giofrè and Cornoldi applied the same flawed logic to children with learning disorders and the structure of intelligence. Their primary finding is that in a sample of Italian children with clinical diagnoses of specific learning disorder, the four index scores of the WISC-IV have lower g-loadings than they do in the general population in Italy. The authors believe that this result implies that alternative measures of intelligence might be more appropriate than the WISC-IV for children with specific learning disorders.

What is the problem with this logic? The problem is that the WISC-IV was one of the tools used to diagnose the children in the first place. Having unusual patterns somewhere in one’s cognitive profile is part of the traditional definition of learning disorders. If the structure of intelligence were the same in this group, we would wonder if the children had been properly diagnosed. This is not a “finding” but an inevitable consequence of the traditional definition of learning disorders. Had the same study been conducted with any other cognitive ability battery, the same results would have been found.

People with Learning Disorders Have Unusual Cognitive Profiles.

A diagnosis of a learning disorder is often given when a child of broadly average intelligence has low academic achievement due to specific cognitive processing deficits. To have specific cognitive processing deficits, there must be one or more specific cognitive abilities that are low compared to the population and also to the child’s other abilities. For example, in the profile below, the WISC-IV Processing Speed Index of 68 is much lower than the other three WISC-IV index scores, which are broadly average. Furthermore, the low processing speed score is a possible explanation of the low Reading Fluency score.

The profile above is unusual. The Processing Speed (PS) score is unexpectedly low compared to the other three index scores. This is just one of many unusual score patterns that clinicians look for when they diagnose specific learning disorders. When we gather together all the unusual WISC-IV profiles in which at least one score is low but others are average or better, it comes as no surprise that the structure of the scores in the sample is unusual. Because the scores are unusually scattered, they are less correlated, which implies lower g-loadings.
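The last step, from lower correlations to lower g-loadings, can be made explicit with a short derivation. As a sketch, assume a standardized single-factor model in which each index score $x_i$ has loading $\lambda_i$ on $g$ and the unique parts $e_i$ are uncorrelated with $g$ and with each other (these symbols are introduced here for illustration and do not come from the paper):

```latex
$$
x_i = \lambda_i g + e_i
\quad\Longrightarrow\quad
r_{ij} = \operatorname{cor}(x_i, x_j) = \lambda_i \lambda_j \qquad (i \neq j)
$$

$$
\lambda_i = \sqrt{\frac{r_{ij}\, r_{ik}}{r_{jk}}}
\qquad \text{for any three distinct scores } i, j, k \text{ with positive correlations}
$$
```

Under these assumptions, if selection multiplied every correlation by the same factor $c$ with $0 < c < 1$, every loading would shrink by a factor of $\sqrt{c}$. Real selection effects are not this uniform, but the direction is the same: weaker correlations can only be reproduced by smaller loadings.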

A Demonstration That Selecting Unusual Cases Can Alter Structural Coefficients

Suppose that the WISC-IV index scores have the correlations below (taken from the U.S. standardization sample, age 14).

| | VC | PR | WM | PS |
|---|---|---|---|---|
| VC | 1.00 | 0.59 | 0.59 | 0.37 |
| PR | 0.59 | 1.00 | 0.48 | 0.45 |
| WM | 0.59 | 0.48 | 1.00 | 0.39 |
| PS | 0.37 | 0.45 | 0.39 | 1.00 |

Now suppose that, from the general population, we select an “LD” sample consisting of all score profiles in which:

- At least one score is less than 90.

- The average of the remaining three scores is greater than 90.

- The average of the three highest scores is at least 15 points higher than the lowest score.
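Applied to a single profile, the rule might look like the sketch below. It follows the R code at the end of the post, which checks the lowest score and the mean of the other three. The function name `is_ld_profile` and its arguments are hypothetical, and in the example profile only the Processing Speed score of 68 comes from the text; the other three values are made up to be broadly average.

```r
# Hypothetical helper: does one profile of index scores meet the selection rule?
is_ld_profile <- function(scores, cutoff = 90, gap = 15) {
  lowest <- min(scores)
  # Mean of the remaining (higher) scores
  mean_rest <- (sum(scores) - lowest) / (length(scores) - 1)
  (lowest < cutoff) && (mean_rest > cutoff) && (mean_rest - lowest > gap)
}

# PS = 68 is from the example in the text; VC, PR, and WM are assumed values
uneven_profile <- c(VC = 102, PR = 98, WM = 95, PS = 68)
flat_profile   <- c(VC = 100, PR = 100, WM = 100, PS = 100)

is_ld_profile(uneven_profile)
is_ld_profile(flat_profile)
```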

Obviously, LD diagnosis is more complex than this. The point is that we are selecting from the general population a group of people with unusual profiles and observing that the correlation matrix is different in the selected group. Using the R code at the end of the post, we see that the correlation matrix is:

| | VC | PR | WM | PS |
|---|---|---|---|---|
| VC | 1.00 | 0.15 | 0.18 | −0.30 |
| PR | 0.15 | 1.00 | 0.10 | −0.07 |
| WM | 0.18 | 0.10 | 1.00 | −0.20 |
| PS | −0.30 | −0.07 | −0.20 | 1.00 |

A single-factor confirmatory factor analysis of the two correlation matrices reveals dramatically lower g-loadings in the “LD” sample.

| | Whole Sample | “LD” Sample |
|---|---|---|
| VC | 0.80 | 0.60 |
| PR | 0.73 | 0.16 |
| WM | 0.71 | 0.32 |
| PS | 0.53 | −0.51 |

Because the PS factor has the lowest g-loading in the whole sample, it is most frequently the score that is out of sync with the others and thus is negatively correlated with the other tests in the “LD” sample.
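One way to check the negative PS loading: in a standardized single-factor model, the correlation implied for any pair of scores is the product of their loadings. The sketch below is only a rough check. It uses the rounded “LD” sample values reported above, and the variable names are illustrative.

```r
# Rounded standardized loadings reported for the "LD" sample
lambda_ld <- c(VC = 0.60, PR = 0.16, WM = 0.32, PS = -0.51)

# Model-implied correlations: product of the loadings for each pair of scores
implied <- outer(lambda_ld, lambda_ld)
diag(implied) <- 1

# Observed correlations in the "LD" sample, as reported above
observed <- matrix(c(
   1.00,  0.15,  0.18, -0.30,
   0.15,  1.00,  0.10, -0.07,
   0.18,  0.10,  1.00, -0.20,
  -0.30, -0.07, -0.20,  1.00),
  nrow = 4, byrow = TRUE, dimnames = dimnames(implied))

round(implied, 2)             # correlations the single factor reproduces
round(implied - observed, 2)  # residuals
```

The three positive loadings reproduce the small positive correlations among VC, PR, and WM. A single factor can only reproduce the negative correlations with PS by giving PS a loading of the opposite sign.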

In the paper referenced above, the reduction in g-loadings was not nearly as severe as in this demonstration, most likely because clinicians frequently observe specific processing deficits in tests outside the WISC. Thus many people with learning disorders have perfectly normal-looking WISC profiles; their deficits lie elsewhere. A mixture of ordinary and unusual WISC profiles can easily produce the moderately lowered g-loadings observed in the paper.
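The mixture argument can be sketched in the same way. The example below uses base R only, with `factanal` standing in for the lavaan CFA used in the demonstration. The 50/50 mix of unusual and other profiles is an assumption chosen purely for illustration; it is not an estimate of the composition of the paper's sample.

```r
# Correlation matrix quoted in the post (U.S. standardization sample, age 14)
vars <- c("VC", "PR", "WM", "PS")
R <- matrix(c(
  1.00, 0.59, 0.59, 0.37,
  0.59, 1.00, 0.48, 0.45,
  0.59, 0.48, 1.00, 0.39,
  0.37, 0.45, 0.39, 1.00),
  nrow = 4, byrow = TRUE, dimnames = list(vars, vars))

set.seed(1)
n <- 100000

# Simulate index scores: independent normals times the Cholesky factor of R
x <- (matrix(rnorm(n * 4), ncol = 4) %*% chol(R)) * 15 + 100
colnames(x) <- vars

# Flag "unusual" profiles with the same rule as the demonstration
lowest <- apply(x, 1, min)
mean_rest <- (rowSums(x) - lowest) / 3
unusual <- (lowest < 90) & (mean_rest > 90) & (mean_rest - lowest > 15)

# Assumed mixture: every unusual profile plus an equal number of other profiles
others <- sample(which(!unusual), sum(unusual))
mixed <- x[c(which(unusual), others), ]

# Single-factor maximum-likelihood loadings (the sign of a factor is arbitrary)
g_loadings <- function(d) round(factanal(d, factors = 1)$loadings[, 1], 2)

rbind(whole   = g_loadings(x),
      mixed   = g_loadings(mixed),
      unusual = g_loadings(x[unusual, ]))
```

Changing the share of unusual profiles in `mixed` shows how the loadings move between the whole-sample values and the values for the purely unusual group.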

Conclusion

In general, one cannot select a sample based on a particular measure and then report as an empirical finding that the sample differs from the population on that same measure. I understand that in this case it was not immediately obvious that the selection procedure would inevitably alter the correlations among the WISC-IV factors. It is clear that the authors of the paper submitted their research in good faith. However, I wish that the reviewers had noticed the problem and informed the authors that the paper was fundamentally flawed. As it stands, this study offers no valid evidence that casts doubt on the appropriateness of the WISC-IV for children with learning disorders. The same results would have occurred with any cognitive battery, including those recommended by the authors as alternatives to the WISC-IV.

R code used for the demonstration

```r
# Correlation matrix from U.S. standardization sample, age 14
WISC <- matrix(c(
  1.00, 0.59, 0.59, 0.37,  # VC
  0.59, 1.00, 0.48, 0.45,  # PR
  0.59, 0.48, 1.00, 0.39,  # WM
  0.37, 0.45, 0.39, 1.00), # PS
  nrow = 4, byrow = TRUE)
colnames(WISC) <- rownames(WISC) <- c("VC", "PR", "WM", "PS")

# Set randomization seed to obtain consistent results
set.seed(1)

# Generate data: 100,000 simulated profiles on the index-score metric (mean 100, SD 15)
x <- as.data.frame(mvtnorm::rmvnorm(100000, sigma = WISC) * 15 + 100)
colnames(x) <- colnames(WISC)

# Lowest score in profile
minSS <- apply(x, 1, min)

# Mean of remaining scores
meanSS <- (apply(x, 1, sum) - minSS) / 3

# LD sample
xLD <- x[(meanSS > 90) & (minSS < 90) & (meanSS - minSS > 15), ]

# Correlation matrix of LD sample
rhoLD <- cor(xLD)
round(rhoLD, 2)

# Load package for CFA analyses
library(lavaan)

# Model for CFA: a single factor (g) measured by the four index scores
m <- "g =~ VC + PR + WM + PS"

# CFA for whole sample
summary(sem(m, x), standardized = TRUE)

# CFA for LD sample
summary(sem(m, xLD), standardized = TRUE)
```